The problem is that the vast majority of emergency radio traffic vanishes into the air, never captured, never analyzed. Every day, thousands of hours of voice communication flow through emergency services, field operations, and command centers. Most of it is never correlated with incident data. It's operational intelligence that disappears the moment it's spoken.

Design voice AI for operations, not just transcription. Real value comes from extracting actionable insights, not just converting speech to text.

I've spent years building voice AI systems for government agencies, including the US Coast Guard and DHS. The same pattern repeats everywhere: critical information transmitted, received, acknowledged, and then... gone. No record. No correlation. No learning. The framework for fixing this is straightforward: Voice to Context to Action.

The Problem: Voice as a Black Hole

In most operational environments, voice communication is treated as ephemeral. Someone says something, someone else hears it (hopefully), and that's it. According to NIST research on emergency response speech recognition, the challenges of capturing and processing voice in field conditions remain significant.

Consider what happens during a major incident:

- Dispatch: "Unit 47, respond to structure fire, 1234 Oak Street, cross street Maple."

- Field: "Dispatch, 47 on scene. Two-story residential, smoke showing from second floor."

- Supervisor: "47, what's your water supply situation?"

- Field: "We've got a hydrant at the corner, hooking up now. Requesting second alarm."

All of this information is valuable: location and type of incident, conditions on arrival, resource requests, timeline of operations. In most departments, none of it is captured systematically. Someone might take notes. There might be mobile data terminal (MDT) entries. The radio traffic is recorded somewhere but never transcribed or analyzed.

After the incident, reconstructing what happened requires listening to hours of audio. Identifying patterns across incidents is nearly impossible. Training uses anecdotes instead of data.

Voice is the richest source of operational data, and we throw it away.

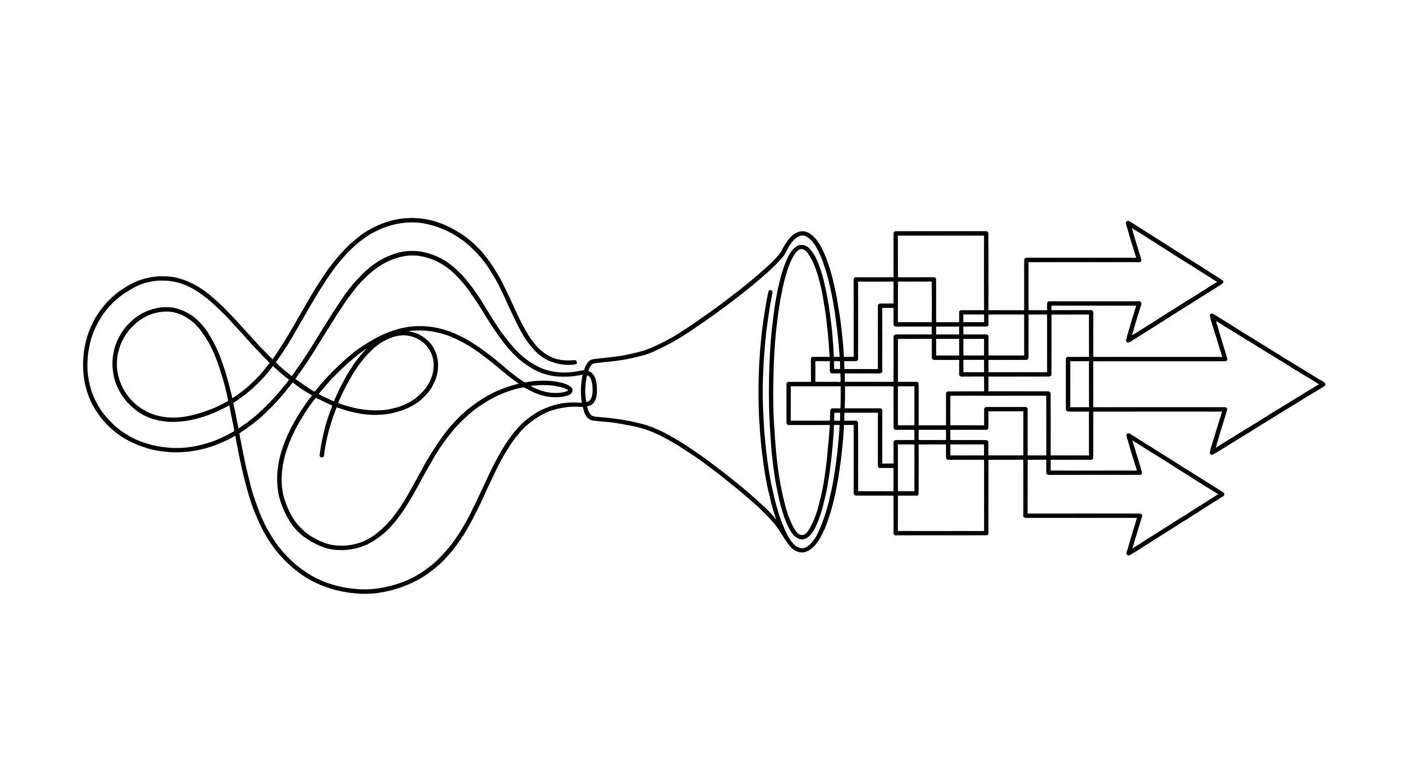

The Framework: Voice to Context to Action

Transforming voice from a black hole into an operational asset requires three stages.

Stage 1: Capture

Before you can analyze voice, you have to capture it reliably. That sounds obvious, but in my experience deploying these systems for the Coast Guard and DHS, it isn't. I've built voice capture pipelines that handle every source listed below, and each one has unique failure modes.

Sources to ingest: analog radio (conventional FM, EDACS), digital radio (P25, DMR, NXDN, TETRA), mobile PTT applications, dispatch CAD audio, phone lines (911, administrative), and body-worn devices.

The challenges: multiple simultaneous channels, variable audio quality, different encoding formats, network latency variations, gaps and dropouts. Capture isn't just "record the audio." It means synchronizing across sources, handling format differences, and maintaining timing accuracy, all in real time with sub-second latency.
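To make the synchronization point concrete, here is a minimal sketch of merging several per-channel streams into one timeline and flagging long silences on a channel. It assumes every segment already carries timestamps from a shared reference clock; the `Segment` type, the channel names, and the `max_silence` threshold are hypothetical, and a flagged gap may be a genuine dropout or simply a quiet channel.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Segment:
    channel: str   # hypothetical channel label, e.g. "dispatch"
    start: float   # seconds on a shared reference clock (assumed)
    end: float


def merge_channels(*streams: Iterable[Segment]) -> Iterator[Segment]:
    """Merge per-channel streams, each already ordered by start time."""
    return heapq.merge(*streams, key=lambda seg: seg.start)


def find_gaps(segments: Iterable[Segment], max_silence: float) -> list[tuple[float, float]]:
    """Return (gap_start, gap_end) pairs longer than max_silence on one channel."""
    gaps = []
    prev_end = None
    for seg in segments:
        if prev_end is not None and seg.start - prev_end > max_silence:
            gaps.append((prev_end, seg.start))
        # Track the latest end time seen so overlapping segments don't create false gaps.
        prev_end = seg.end if prev_end is None else max(prev_end, seg.end)
    return gaps
```

Merging lazily with `heapq.merge` keeps memory flat when channels are long-running streams, which is one reasonable choice among several.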

Stage 2: Interpret (Context)

Raw transcription isn't enough. "Structure fire at 1234 Oak Street" is text. What you need is structured data:

    {
      "event_type": "structure_fire",
      "location": {
        "address": "1234 Oak Street",
        "cross_street": "Maple",
        "coordinates": [47.6062, -122.3321]
      },
      "conditions": {
        "structure_type": "residential",
        "stories": 2,
        "smoke_visible": true,
        "smoke_location": "second_floor"
      },
      "resources": {
        "units_on_scene": ["47"],
        "units_requested": ["second_alarm"]
      }
    }

Interpretation involves:

- Entity extraction: Pulling out locations, unit IDs, times, conditions, resources

- Intent classification: Is this a status update? A resource request? A command? An acknowledgment?

- Context correlation: Linking this transmission to the incident, previous transmissions, CAD data, and GIS information

- Anomaly detection: Flagging things that don't match patterns—unusual locations, missing acknowledgments, escalating language

This is where domain-specific training matters enormously. Generic NLP models don't understand fire service radio protocols. They don't know what "second alarm" means. They can't parse "47 is 10-97 at the scene" without domain training.
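As a rough illustration of entity extraction and intent classification, the sketch below uses hand-written rules on a single transcript line. It is a stand-in for a trained domain model, not a description of one: the ten-code table, the intent labels, and the regular expressions are all assumptions, and ten-code meanings vary by agency.

```python
import re

# Hypothetical ten-code table; real meanings differ between agencies.
TEN_CODES = {"10-4": "acknowledged", "10-97": "arrived_on_scene"}

# First matching pattern wins, so order encodes priority (an assumption).
INTENT_PATTERNS = [
    ("resource_request", re.compile(r"\brequest(?:ing)?\b", re.IGNORECASE)),
    ("command", re.compile(r"\brespond to\b", re.IGNORECASE)),
    ("status_update", re.compile(r"\bon scene\b|\b10-97\b", re.IGNORECASE)),
    ("acknowledgment", re.compile(r"\b(?:copy|roger|10-4)\b", re.IGNORECASE)),
]

UNIT_RE = re.compile(r"\b(?:unit|engine|ladder|medic)\s+(\d+)\b", re.IGNORECASE)
ADDRESS_RE = re.compile(r"\b(\d{1,5}\s+[A-Z][a-z]+\s+(?:Street|Avenue|Road))\b")


def interpret(text):
    """Turn one transcript line into a small structured record."""
    intent = next(
        (name for name, pattern in INTENT_PATTERNS if pattern.search(text)),
        "unknown",
    )
    codes = {
        code: meaning
        for code, meaning in TEN_CODES.items()
        if re.search(rf"\b{re.escape(code)}\b", text)
    }
    return {
        "intent": intent,
        "units": UNIT_RE.findall(text),
        "addresses": ADDRESS_RE.findall(text),
        "codes": codes,
    }
```

Rules like these break quickly on real traffic, which is the argument for domain-specific training; they are still useful as a baseline to measure a model against.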

Stage 3: Act

Data without action is just expensive storage. Interpreted voice needs to trigger operational responses.

Real-time alerts: Mayday detection with immediate supervisor notification. Resource requests with automatic CAD population. Condition escalation with commander notification. Missing check-ins with accountability flagging.

Guided actions: Recommended resource dispatch based on conditions. Suggested tactics from similar incidents. Automatic mutual aid requests when thresholds are met.

Post-incident: Auto-generated timeline reconstruction. Searchable transcript with highlights. Pattern analysis across incidents. Training scenario generation.
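The three kinds of action above can be sketched in a few functions. This is an illustrative outline under stated assumptions, not a production design: the `Alert` type, the routing targets (`"supervisor"`, `"cad"`), the intent label, and the check-in interval are hypothetical, and mayday detection here is a plain keyword match.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from datetime import datetime, timezone

MAYDAY_RE = re.compile(r"\bmayday\b", re.IGNORECASE)


@dataclass(frozen=True)
class Alert:
    kind: str     # e.g. "mayday" or "resource_request"
    notify: str   # hypothetical routing target
    text: str


def route(text: str, intent: str) -> list[Alert]:
    """Map one interpreted transmission to zero or more alerts."""
    alerts = []
    if MAYDAY_RE.search(text):
        alerts.append(Alert("mayday", "supervisor", text))
    if intent == "resource_request":
        alerts.append(Alert("resource_request", "cad", text))
    return alerts


def overdue_units(last_checkin: dict[str, float], now: float, interval: float) -> list[str]:
    """Units whose last check-in is older than the accountability interval (seconds)."""
    return sorted(unit for unit, ts in last_checkin.items() if now - ts > interval)


def build_timeline(events) -> list[str]:
    """Format (unix_timestamp, speaker, text) tuples as a UTC-ordered timeline."""
    lines = []
    for ts, speaker, text in sorted(events, key=lambda event: event[0]):
        stamp = datetime.fromtimestamp(ts, tz=timezone.utc).strftime("%H:%M:%S")
        lines.append(f"{stamp}  {speaker}: {text}")
    return lines
```

Keeping routing as pure functions over interpreted transmissions makes the rules easy to test against recorded traffic before they are allowed to page anyone.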

Why "Minutes Not Hours" Matters

In emergency services, the value of information decays rapidly.

Real-time (seconds): "There's a second victim on the third floor"—this needs to reach search teams immediately.

Near-time (minutes): Resource deployment decisions. Which units are available? What's the optimal response?

Post-incident (hours): What happened? What can we learn? This traditionally takes days.

Good voice AI compresses the timeline. Real-time alerts trigger within seconds of detection. Post-incident reports generate automatically. Pattern analysis that took weeks now happens overnight.

A fire can go from "smoke showing" to "fully involved" in four minutes. Getting the second alarm dispatched 90 seconds faster can mean containment versus total loss. Getting the mayday alert to the incident commander (IC) in 3 seconds instead of 30 can mean rescue versus recovery.

Building Domain-Specific Voice AI

None of this works with off-the-shelf ASR. As documented in academic research on real-time speech recognition for emergency services, generic models fail on:

- Radio protocols: "10-4," "copy," "roger"—these have specific meanings

- Unit identifiers: "Engine 47" isn't "engine forty-seven" in the transcript, it's a specific entity

- Locations: "Oak and Maple" is an intersection, not two separate words

- Jargon: "Second alarm," "working fire," "code 3"—domain vocabulary

- Phonetic alphabet: "Adam-12" not "Adam twelve"
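One small piece of this, turning spoken call signs into canonical entities, can be sketched as a post-processing pass over generic ASR output. The prefix list and the separator each prefix takes are hypothetical, and the number parser only covers one to ninety-nine; a real deployment would draw these from the agency's own unit roster.

```python
import re

ONES = dict(zip("one two three four five six seven eight nine".split(), range(1, 10)))
TEENS = dict(zip(
    "ten eleven twelve thirteen fourteen fifteen sixteen seventeen eighteen nineteen".split(),
    range(10, 20),
))
TENS = dict(zip("twenty thirty forty fifty sixty seventy eighty ninety".split(), range(20, 100, 10)))

# Hypothetical call-sign prefixes and the separator used in the canonical form.
PREFIX_SEP = {"engine": " ", "ladder": " ", "medic": " ", "unit": " ", "adam": "-"}


def _alt(words):
    return "|".join(words)


CALLSIGN_RE = re.compile(
    rf"\b(?P<prefix>{_alt(PREFIX_SEP)})\s+"
    rf"(?:(?P<tens>{_alt(TENS)})(?:[-\s](?P<ones>{_alt(ONES)}))?"
    rf"|(?P<teen>{_alt(TEENS)})"
    rf"|(?P<one>{_alt(ONES)}))\b",
    re.IGNORECASE,
)


def _number(match):
    """Convert the matched number words (1 to 99) to an integer."""
    if match["tens"]:
        ones = ONES[match["ones"].lower()] if match["ones"] else 0
        return TENS[match["tens"].lower()] + ones
    if match["teen"]:
        return TEENS[match["teen"].lower()]
    return ONES[match["one"].lower()]


def normalize(text):
    """Rewrite spoken call signs, e.g. 'engine forty-seven', in canonical form."""
    def repl(match):
        prefix = match["prefix"].lower()
        return f"{prefix.capitalize()}{PREFIX_SEP[prefix]}{_number(match)}"
    return CALLSIGN_RE.sub(repl, text)
```

For example, `normalize("engine forty-seven on scene")` yields `"Engine 47 on scene"`, and `normalize("adam twelve responding")` yields `"Adam-12 responding"`.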

Effective operational voice AI requires custom models trained on actual operational communications. Not actors reading scripts. Real radio traffic with noise, crosstalk, and chaos. Demo environments tell you nothing—what matters is whether the system works in your environment.

The Integration Layer

Voice doesn't exist in isolation. Operational voice AI needs to correlate with:

CAD/RMS systems: Every extracted entity links to the incident record. Unit statuses update automatically. Resources stay current.

GIS/mapping: Location references resolve to coordinates. Units and incidents plot on maps in real time. Geofences trigger alerts.

Video feeds: Voice mentions of locations trigger relevant camera pulls. Body-worn video syncs with radio traffic.

Sensors: Smoke detectors, shot spotters, traffic monitors. Voice can reference sensor data, and sensor triggers can focus voice analysis.
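For the GIS piece, a minimal sketch of resolving a spoken address and testing it against a circular geofence might look like the following. The in-memory `GAZETTEER` is a hypothetical stand-in for a real geocoding service (its single entry reuses the sample coordinates from the structured example earlier), and the haversine formula treats the Earth as a sphere, which is usually adequate at city scale.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius, spherical approximation

# Hypothetical lookup table standing in for a geocoder.
GAZETTEER = {"1234 oak street": (47.6062, -122.3321)}


def resolve(address):
    """Return (lat, lon) for a known address, or None if it is not in the table."""
    return GAZETTEER.get(address.strip().lower())


def haversine_m(a, b):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def in_geofence(point, center, radius_m):
    """True if point lies within radius_m meters of center."""
    return haversine_m(point, center) <= radius_m
```

Circular fences are the simplest case; polygon fences around facilities or hazard zones would need a point-in-polygon test instead.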

The goal isn't to replace existing systems. It's to make voice a first-class data source that integrates with everything.

Privacy and Security Requirements

Voice data is sensitive. The DHS Science & Technology Directorate outlines specific requirements for first responder technology. Any operational voice AI system must handle:

- Data sovereignty: Data stays in authorized locations. No cloud processing without explicit approval. On-premise deployment options.

- Access control: Role-based access to transcripts and analysis. Audit logging of all access.

- Retention policies: Configurable retention with automatic purging. Compliance with records requirements.

- PII handling: Detection and redaction of personal information when required.
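Two of these requirements, PII redaction and retention purging, are simple enough to sketch. The patterns below cover only US-style phone and Social Security numbers and are assumptions for illustration; names, addresses, and medical details need far more than regular expressions. The record layout used by `purge_expired` is likewise hypothetical.

```python
import re

# Illustrative patterns only; real PII detection needs broader coverage.
PII_PATTERNS = {
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text):
    """Replace matched PII with a label and report how many of each were found."""
    counts = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


def purge_expired(records, now, retention_seconds):
    """Keep only records younger than the retention window.

    Each record is assumed to be a dict with a 'created' unix timestamp.
    """
    return [r for r in records if now - r["created"] <= retention_seconds]
```

Returning the counts alongside the redacted text gives the audit log something to record without storing the sensitive values themselves.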

For government clients, this isn't optional. It's table stakes for even beginning the conversation.

Real-World Deployment Lessons

After 12 years building voice AI systems and deploying them for multiple government agencies, these patterns have emerged consistently. Here's what actually works:

Start with one channel. Don't try to capture everything at once. Pick the highest-value radio channel or dispatch frequency. Prove the system works there before expanding. Scope creep kills voice AI projects faster than technical problems.

Involve operators early. The people using the radios know what information matters. They know which phrases indicate escalation. They know the edge cases that break assumptions. Their input is worth more than any benchmark.

Accept imperfection. No voice AI system achieves 100% accuracy in operational environments. I learned the hard way that the question isn't "is it perfect?" but "is it useful despite its limitations?" A system that catches 80% of critical events is far more valuable than no system at all.

Build feedback loops. Operators should be able to correct errors easily. Those corrections should improve the system. This isn't a one-time deployment—it's an ongoing refinement process.
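One lightweight way to close the loop, sketched here as an assumption rather than a description of any deployed system, is to collect operator corrections and promote a correction to an automatic substitution once enough operators have agreed on it. The `CorrectionStore` class and its `min_votes` threshold are hypothetical; a fuller system would also feed the corrected transcripts back into model training.

```python
import re
from collections import Counter


class CorrectionStore:
    """Collect operator corrections and apply the ones with enough agreement."""

    def __init__(self, min_votes=2):
        self.min_votes = min_votes
        self.votes = Counter()

    def record(self, heard, corrected):
        """Register one operator correction of a misrecognized phrase."""
        self.votes[(heard.lower(), corrected)] += 1

    def rules(self):
        """Corrections confirmed at least min_votes times.

        If two different corrections for the same phrase both qualify,
        the one recorded later wins; a real system should resolve that explicitly.
        """
        return {heard: fixed for (heard, fixed), n in self.votes.items() if n >= self.min_votes}

    def apply(self, text):
        """Apply confirmed corrections to a transcript, case-insensitively."""
        for heard, fixed in self.rules().items():
            text = re.sub(re.escape(heard), lambda _m, fixed=fixed: fixed, text, flags=re.IGNORECASE)
        return text
```

Requiring more than one vote is a deliberate guard: a single mistaken correction should not silently rewrite every future transcript.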

The Bottom Line

Every year, operational failures happen because information didn't flow fast enough. The radio call that got missed. The escalation that wasn't recognized. The pattern that nobody saw.

Voice AI won't fix every problem. But it can make voice as valuable as every other data source. For field operations where voice is the primary communication channel, that transformation is essential.

The framework is simple: Voice to Context to Action. Capture everything. Interpret it into structured data. Trigger the right responses. Do it in minutes, not hours. The technology exists—the question is whether organizations are willing to treat voice as the critical data source it actually is.

Sources

- NIST: Speech Recognition in Emergency Response — Federal research on ASR challenges for first responders

- ScienceDirect: Real-time Speech Recognition for Emergency Services — Academic review of voice AI in public safety

- DHS S&T: First Responder Technology — Technology solutions for emergency communications
